HYPERNYM + MongoDB + Voyage: Embedding Intelligence

For MongoDB Stakeholders

Hypernym's proprietary processes create volumetric slices of your data, and each slice contains elements already sorted by LLM-perceived importance. Measure twice, cut once: trust the Voyage + Hypernym Eval Team.

For Technical Teams

Hypernym extracts a semantic supercategory (a six-word hypernym) from each mega-chunk, then extracts N elements relative to that category. The LLM naturally returns these elements in relational priority order. Domain embeddings achieve 81% correlation with fact preservation, versus 78% for the best general models, and that gap tells us where to slice. Request our technical whitepaper for implementation details.
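
The extraction flow described above can be pictured with a small sketch. It assumes that a "slice" is simply the top-k prefix of the priority-ordered element list. The source does not define volumetric slices, so this is an illustration rather than Hypernym's proprietary method. `HypernymExtraction` and `priority_slices` are hypothetical names, and the LLM call that would produce the hypernym and the elements is left out.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class HypernymExtraction:
    """Hypothetical container for one mega-chunk's extraction result."""
    hypernym: str          # semantic supercategory, expected to be six words
    elements: List[str]    # elements in the order the LLM returned them (highest priority first)

    def __post_init__(self) -> None:
        words = self.hypernym.split()
        if len(words) != 6:
            raise ValueError(f"expected a 6-word hypernym, got {len(words)} words")


def priority_slices(extraction: HypernymExtraction, sizes: Sequence[int]) -> List[List[str]]:
    """Return nested top-k slices of the priority-ordered elements (assumed slice definition).

    The list is already sorted by priority, so the slice of size k is just its
    first k elements. Every smaller slice is therefore contained in every larger one.
    """
    n = len(extraction.elements)
    slices = []
    for k in sorted(sizes):
        if k < 0:
            raise ValueError("slice size must be non-negative")
        slices.append(extraction.elements[:min(k, n)])
    return slices


if __name__ == "__main__":
    # Illustrative input only; not taken from any real extraction.
    ex = HypernymExtraction(
        hypernym="quarterly revenue drivers for retail banking",
        elements=["net interest margin", "deposit growth", "fee income", "loan losses"],
    )
    for s in priority_slices(ex, [1, 2, 4]):
        print(len(s), s)
```

Nested prefixes are chosen because they depend only on the order the LLM already provides. Cutting at any k keeps the highest-priority elements without a re-ranking step.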

Why Now

By combining Hypernym's relevance-sorted compression with Voyage's domain embeddings, MongoDB can guarantee that customers capture the most critical information regardless of document size, making context-window limitations irrelevant.

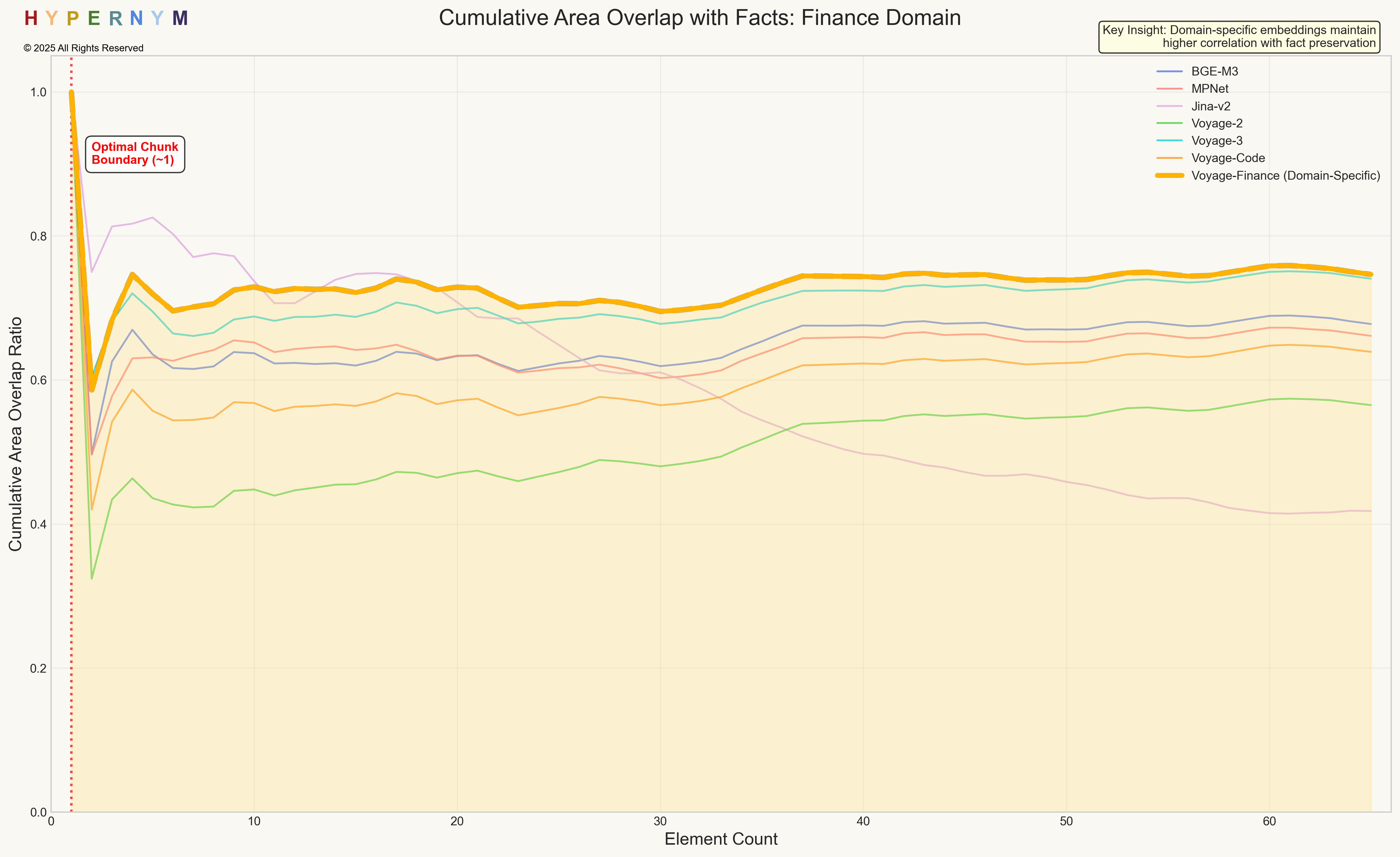

Cumulative Area Overlap Analysis

Shows how domain-specific embeddings stay aligned with information content as the element count increases, using cumulative area overlap to measure the shared space between model predictions and actual fact preservation.
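
The exact overlap formula behind this figure is not given here. One plausible reading is sketched below. Both curves are sampled at element counts 1..N, and at each prefix the area under their pointwise minimum is divided by the area under their pointwise maximum, which gives a Jaccard-style ratio where 1.0 means the curves enclose the same area. The function name `cumulative_area_overlap` and the trapezoidal integration are assumptions, not the evaluation team's method.

```python
from typing import List, Sequence


def _trapezoid(ys: Sequence[float]) -> float:
    """Area under a curve sampled at unit-spaced x values (trapezoidal rule)."""
    return sum((ys[i] + ys[i + 1]) / 2.0 for i in range(len(ys) - 1))


def cumulative_area_overlap(predicted: Sequence[float], preserved: Sequence[float]) -> List[float]:
    """Hypothetical overlap ratio of two curves, accumulated as element count grows.

    Both inputs are sampled at element counts 1..N. Entry i of the result covers
    counts 1..i+2 and equals area(pointwise min) / area(pointwise max), with the
    min/max taken at the sample points only.
    """
    if len(predicted) != len(preserved):
        raise ValueError("curves must have the same length")
    lower = [min(a, b) for a, b in zip(predicted, preserved)]
    upper = [max(a, b) for a, b in zip(predicted, preserved)]
    ratios = []
    for k in range(2, len(lower) + 1):  # an area needs at least two sample points
        union = _trapezoid(upper[:k])
        ratios.append(_trapezoid(lower[:k]) / union if union > 0 else 1.0)
    return ratios


if __name__ == "__main__":
    # Toy curves for illustration only; these are not measured results.
    model_curve = [0.0, 1.0, 1.0]
    fact_curve = [0.0, 0.5, 1.0]
    print(cumulative_area_overlap(model_curve, fact_curve))
```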

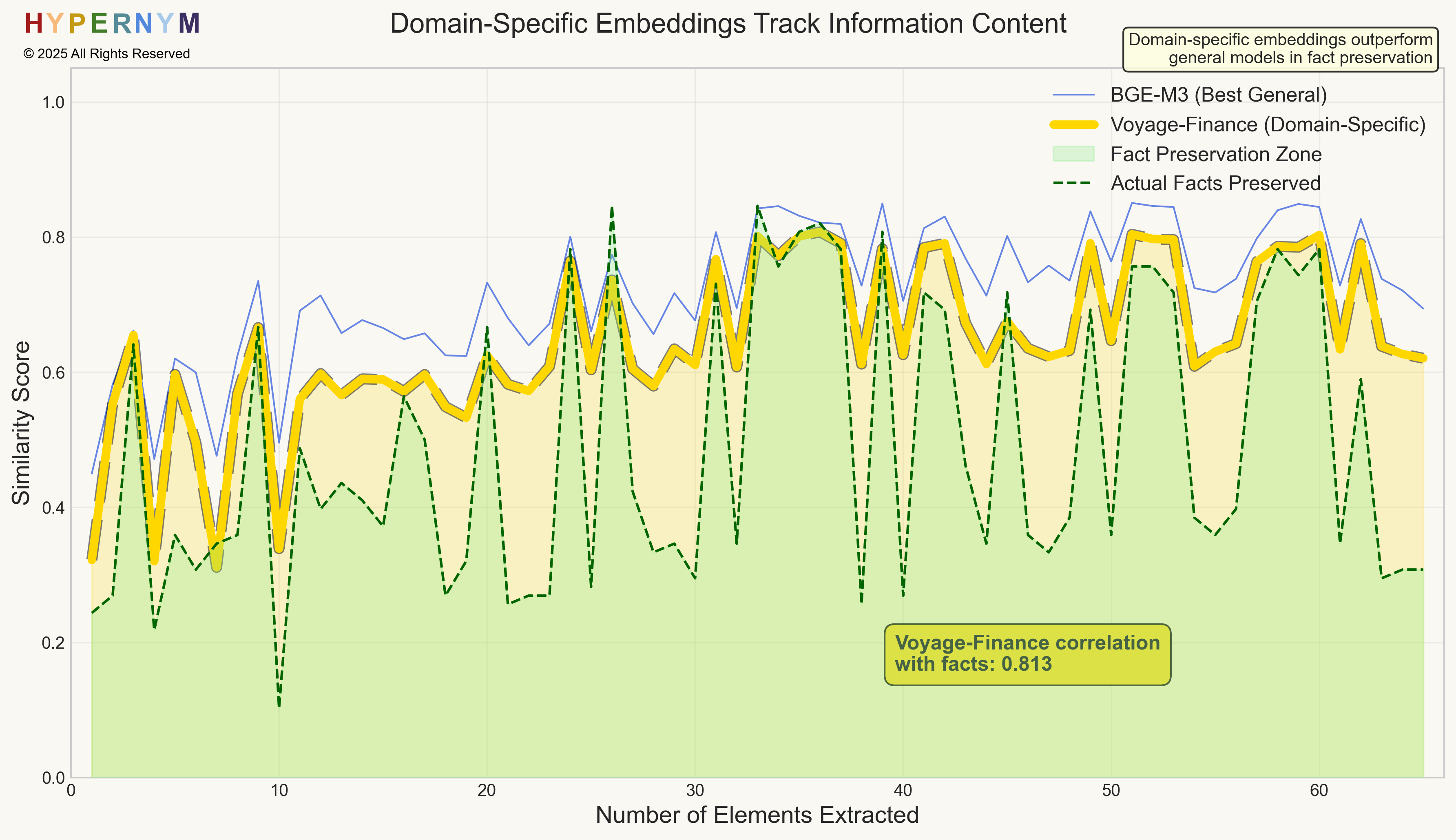

Direct Comparison: Domain vs. General

Simplified view highlighting the difference between Voyage-Finance (81% correlation) and BGE-M3 (78% correlation) in tracking actual information preservation patterns.
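
The reported figures can be read as Pearson correlation coefficients, so 81% would mean r = 0.81 and 78% would mean r = 0.78. That interpretation is an assumption. The sketch below shows how such a comparison could be computed: each model's per-slice scores are correlated with measured fact preservation, and the models are then ranked. The names `pearson` and `rank_models` and the toy inputs are hypothetical. They do not reproduce the Voyage-Finance or BGE-M3 measurements.

```python
import math
from typing import Dict, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (sx * sy)


def rank_models(preservation: Sequence[float], scores: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Correlate each model's per-slice scores with measured fact preservation.

    Returns {model_name: r}, ordered from strongest to weakest correlation.
    """
    results = {name: pearson(s, preservation) for name, s in scores.items()}
    return dict(sorted(results.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    # Illustrative numbers only; placeholders stand in for real model names.
    facts = [0.2, 0.5, 0.7, 0.9]
    print(rank_models(facts, {
        "domain_model": [0.25, 0.45, 0.75, 0.85],
        "general_model": [0.30, 0.60, 0.55, 0.90],
    }))
```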